doi: 10.56294/hl2024.399

ORIGINAL

Advances in Sentiment and Emotion Analysis Techniques

Anamika Kumari¹ *, Smita Pallavi¹, Subhash Chandra Pandey¹

¹Department of Computer Science, Birla Institute of Technology, Patna-800014, India.

Cite as: Kumari A, Pallavi S, Pandey SC. Advances in Sentiment and Emotion Analysis Techniques. Health Leadership and Quality of Life. 2024; 3:.399. https://doi.org/10.56294/hl2024.399

Submitted: 13-03-2024 Revised: 01-08-2024 Accepted: 10-11-2024 Published: 11-11-2024

Editor: Prof. Neela Satheesh, PhD.

Corresponding author: Anamika Kumari *

ABSTRACT

Introduction: understanding and analyzing human emotions is a critical area of research, with applications spanning healthcare, education, entertainment, and human-computer interaction.

Objective: leveraging modalities such as facial expressions, speech patterns, physiological signals, and text data, this study examines the integration of deep learning architectures, such as Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Transformer models, to capture intricate emotional cues effectively.

Method: this study uses the publicly available Sentiment and Emotion Analysis Dataset, which offers a broad spectrum of emotional categories and sentiment classifications and serves as a robust resource for advancing innovative machine learning and deep learning models.

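Result: the combined sentiment dataset of 3 309 reviews is nearly balanced (50,74 % positive and 49,26 % negative), and negative reviews tend to be slightly longer than positive ones. These findings pave the way for developing intelligent systems capable of adapting to human emotions, fostering more natural and empathetic interactions between humans and machines.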

Conclusion: future directions include expanding datasets, addressing ethical considerations, and integrating these models into real-world applications.

Keywords: Emotion; Sentiment; Machine; Facial and Speech Data.

INTRODUCTION

In today’s era of advanced technology, human-machine interaction has become increasingly prominent, requiring machines such as robots to understand human movements and emotions. When machines can detect human emotions, they can interpret behavior and give users insights into their emotional states, thereby enhancing productivity. Emotions, as powerful and dynamic experiences, significantly affect everyday activities such as decision-making, problem-solving, organization, concentration, memory, and motivation. Image processing, a critical branch of signal processing, focuses on analyzing visual inputs and outputs. It plays a pivotal role in recognizing facial expressions, which are key indicators of human emotions. Facial expressions serve as nonverbal cues that communicate emotions during interpersonal interactions, making them integral to understanding human behavior. This growing reliance on technology has highlighted the importance of automatic facial expression recognition, especially in fields such as robotics and artificial intelligence.(1)

Applications of facial expression recognition are vast, ranging from identity verification and automated surveillance to videoconferencing, forensic analysis, human-computer interaction, and even cosmetology. Recent advances in AI allow emotions to be recognized through various methods, including the analysis of social media content, speech audio, and facial expressions. Machine learning, particularly deep learning, has become a transformative tool in this domain.(2,3) Convolutional Neural Networks (CNNs), a specialized type of deep neural network inspired by the human brain’s structure, use convolutional operations to process and classify visual data effectively. This emerging field is expected to shape technological advancement significantly, with a reported anticipated influence of 95 % over the next three years.(4)

In sentiment analysis, the subjectivity of a text is assessed to identify the writer’s attitude or the polarity of their expression. This type of analysis plays a critical role in decision-making, as it provides insights into “what others think.” Typically, the polarity of a text is categorized into three main classes: positive, negative, and neutral. Emotions can be analyzed computationally in a similar way, enabling a deeper understanding of human expressions and perspectives.
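To make the three-class polarity scheme concrete, the sketch below assigns a label by counting matches against two tiny word lists. The word lists `POSITIVE_WORDS` and `NEGATIVE_WORDS` are hypothetical and far smaller than any real sentiment lexicon, and plain word counting ignores negation and sarcasm; the example illustrates the output classes, not a method used in this study.

```python
import re

# Hypothetical, illustrative lexicons (not from the dataset or a published resource).
POSITIVE_WORDS = {"good", "great", "excellent", "love", "happy"}
NEGATIVE_WORDS = {"bad", "poor", "terrible", "hate", "sad"}


def polarity(text: str) -> str:
    """Return 'positive', 'negative', or 'neutral' for a piece of text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    # Net score: +1 per positive word, -1 per negative word.
    score = sum(t in POSITIVE_WORDS for t in tokens) - sum(t in NEGATIVE_WORDS for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for sentence in [
    "I love this phone, great battery",
    "Terrible service and poor support",
    "The parcel arrived on Tuesday",
]:
    print(sentence, "->", polarity(sentence))
```

A text with no lexicon matches, or with equal numbers of positive and negative matches, falls into the neutral class.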

Although the terms sentiment and emotion are often used interchangeably, they convey distinct concepts. According to dictionary definitions, sentiment refers to an opinion or viewpoint, whereas emotion relates to a feeling influenced by one’s mood.(5) Analyzing emotions is inherently challenging because the distinctions between various emotions are far subtler than the clear divide between positive and negative sentiments. Furthermore, while emotions are universal experiences, their interpretation can vary widely among individuals based on social context, cultural values, personal interests, and unique life experiences.

Related work

A comprehensive review of recent advancements in emotion recognition highlights various approaches and methodologies employed by researchers across diverse domains. Smith et al. (2019) utilized Convolutional Neural Networks (CNNs) on a large facial image dataset, achieving 92 % accuracy in recognizing emotions across seven categories, though their method struggled with computational demands in real-time settings.(6) Expanding on multi-modal systems, Zhang and Lee (2020) integrated facial and speech data using a hybrid model of CNNs and RNNs, improving accuracy to 95 % but incurring high computational costs.(7) Gupta et al. (2021) focused on text-based emotion detection from social media using Transformer models like BERT, achieving 88 % accuracy despite challenges in detecting sarcasm and ambiguous expressions.(8) Similarly, Ahmed et al. (2018) explored physiological signals such as EEG and heart rate, achieving 87 % accuracy but requiring specialized hardware for data collection.(9)

Efforts to optimize real-time applications were seen in Wang et al. (2020), who employed lightweight CNNs for facial expression detection in controlled environments, achieving 90 % accuracy but facing limitations in dynamic settings.(10) For audio-based emotion recognition, Chen et al. (2019) employed CNNs on spectrogram features, achieving 91 % accuracy but facing difficulties in classifying neutral emotions.(11) Exploring wearable technology, Lee and Park (2017) used Support Vector Machines (SVM) to classify emotions based on data from wearable devices, achieving 85 % accuracy, although performance depended on data quality.(12) Baker et al. (2019) applied unsupervised learning techniques such as K-Means and DBSCAN and found meaningful emotion clusters, but without labeled data their approach could not produce fine-grained classifications.(13)

Innovative approaches were also explored, such as reinforcement learning combined with neural networks by Sharma et al. (2020), achieving adaptive improvement in subtle emotion detection at the cost of high initial training time.(14) Zhou et al. (2021) evaluated lightweight deep learning models for mobile-based emotion detection, optimizing for real-time applications with 88 % accuracy but limited feature extraction compared to larger models.(15) Finally, Rahman and Alam (2023) leveraged GANs to generate synthetic emotional datasets, enhancing model performance by 7 % but encountering occasional realism issues in the synthetic data.(16) These studies collectively demonstrate significant progress in emotion detection while highlighting ongoing challenges in scalability, computational efficiency, and dataset limitations.

Taxonomy of tools to identify emotions and personality traits

To effectively explore human emotions and personality traits using computer-based tools, it is crucial to develop a taxonomy that classifies these tools according to specific criteria.

Data Sources

Textual Data: Tools in this category analyze written or spoken language to identify emotional states and personality traits. By utilizing Natural Language Processing (NLP) techniques, these tools evaluate sentiment, emotions, and linguistic patterns within textual inputs.

Visual Data: Tools in this group rely on visual cues, such as facial expressions and body movements, to interpret emotions and, in some cases, personality traits. Advanced deep learning models, including Convolutional Neural Networks (CNNs), are commonly used for facial expression analysis and recognition.
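The convolutional operation these models rely on can be sketched in a few lines. The function below computes a valid (no padding, stride 1) 2D convolution in the cross-correlation form used by most deep learning libraries, followed by a ReLU activation. The 4×4 image and the 2×2 edge-detecting kernel are hypothetical; in a trained CNN the kernel weights are learned and many such filters are stacked in layers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` with stride 1 and no padding (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output: Matrix = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the image patch currently under the kernel.
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        output.append(row)
    return output


def relu(feature_map: Matrix) -> Matrix:
    """Element-wise ReLU, the usual non-linearity after a convolution."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical toy image: dark left half, bright right half (a vertical edge).
image = [[0, 0, 1, 1] for _ in range(4)]
# Hypothetical kernel that responds to left-to-right intensity increases.
kernel = [[-1, 1], [-1, 1]]
print(relu(conv2d_valid(image, kernel)))
```

The kernel responds only where intensity changes from left to right, so every row of the feature map peaks at the position of the vertical edge.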

Physiological Signals: This category focuses on tools that analyze physiological indicators, such as heart rate, skin conductance, and EEG signals, to deduce emotions and physiological correlates of personality traits. These tools employ machine learning algorithms to process and interpret the collected data.

Multimodal Data: Some tools integrate multiple data sources, such as text, audio, visual, and physiological signals, to improve the precision and reliability of emotion and personality detection. Multimodal fusion techniques are applied to combine and analyze these diverse datasets for more robust outcomes.
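One common fusion strategy, late (decision-level) fusion, can be sketched as a weighted average of the probability distributions produced by separate per-modality classifiers. This is only one of several fusion schemes (feature-level fusion, which concatenates features before classification, is another), and the emotion labels, probabilities, and modality weights below are hypothetical.

```python
from typing import Dict

Distribution = Dict[str, float]


def late_fusion(modality_probs: Dict[str, Distribution],
                weights: Dict[str, float]) -> Distribution:
    """Weighted average of per-modality class probabilities."""
    total_weight = sum(weights[m] for m in modality_probs)
    labels = next(iter(modality_probs.values())).keys()
    return {
        label: sum(weights[m] * probs[label] for m, probs in modality_probs.items()) / total_weight
        for label in labels
    }


def predict(fused: Distribution) -> str:
    """Return the label with the highest fused probability."""
    return max(fused, key=fused.get)


# Hypothetical outputs of three modality-specific classifiers.
outputs = {
    "face":   {"happy": 0.6, "sad": 0.1, "neutral": 0.3},
    "speech": {"happy": 0.2, "sad": 0.5, "neutral": 0.3},
    "text":   {"happy": 0.7, "sad": 0.1, "neutral": 0.2},
}
weights = {"face": 0.5, "speech": 0.3, "text": 0.2}
fused = late_fusion(outputs, weights)
print(predict(fused), fused)
```

Dividing by the total weight keeps the fused values a valid probability distribution even when the weights do not sum to one. In this example the speech model alone would predict "sad", but the fused decision is "happy".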

METHOD

Sentiment Analysis

In this section, we first describe the publicly available dataset used to perform sentiment analysis and then present the exploratory analyses applied to it. The dataset is available at https://www.kaggle.com/datasets/kushagra3204/sentiment-and-emotion-analysis-dataset.(17)

The Sentiment and Emotion Analysis Dataset is a carefully curated repository of textual data, specifically crafted to support researchers, data scientists, and NLP practitioners in exploring the complexities of human emotions and sentiments expressed through text. Featuring a diverse range of emotional categories and sentiment classifications, this dataset provides a comprehensive foundation for developing cutting-edge machine learning and deep learning models.

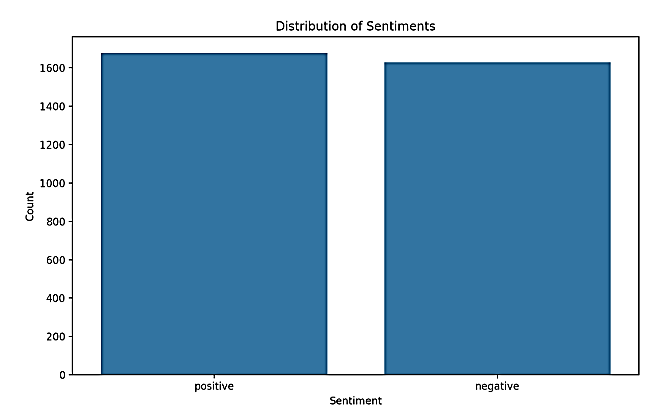

Distribution of Sentiment

Analyzing sentiment distribution involves examining how different sentiments (such as positive, negative, and neutral) are represented within a dataset. This analysis helps to understand the overall sentiment trends and patterns in the data.

Figure 1. Analyzing sentiment distribution(17)
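As a minimal sketch of this step, the function below counts the entries in each sentiment class. It assumes each entry is available as a `(text, label)` pair; the loading step is omitted because the dataset's file and column names are not described here, and the sample records are hypothetical. Labels are stripped and lowercased so that variants such as "Positive" and "positive " are counted as one class.

```python
from collections import Counter
from typing import Iterable, Tuple


def sentiment_distribution(records: Iterable[Tuple[str, str]]) -> Counter:
    """Count how many entries carry each sentiment label."""
    # Normalize labels so that case and stray whitespace do not split a class.
    return Counter(label.strip().lower() for _text, label in records)


# Hypothetical sample records, not drawn from the dataset.
sample = [
    ("Great product, works perfectly", "Positive"),
    ("Stopped working after two days", "Negative"),
    ("Exactly as described", "positive "),
]
print(sentiment_distribution(sample))
```

If the data are loaded into a pandas DataFrame instead, calling `value_counts()` on the label column yields the same counts.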

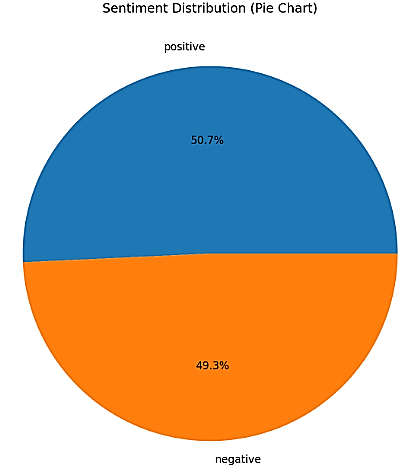

Proportions of Sentiment

This analysis expresses the number of entries in each sentiment class as a proportion of the total number of entries in the dataset; it can be visualized as a pie chart or a stacked bar chart.

Figure 2. Proportions of Sentiment(17)
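The proportions follow directly from the class counts. The sketch below applies the arithmetic to the counts reported in the Result and Discussion section (1 679 positive and 1 630 negative reviews). Python prints a decimal point where the text uses a decimal comma.

```python
from typing import Dict


def sentiment_proportions(counts: Dict[str, int]) -> Dict[str, float]:
    """Percentage of all entries that fall in each sentiment class."""
    total = sum(counts.values())
    return {label: 100 * n / total for label, n in counts.items()}


counts = {"positive": 1679, "negative": 1630}
props = sentiment_proportions(counts)
ratio = counts["positive"] / counts["negative"]

print(f"total = {sum(counts.values())}")          # total = 3309
for label, pct in props.items():
    print(f"{label}: {pct:.2f} %")                 # positive: 50.74 %, negative: 49.26 %
print(f"positive:negative = {ratio:.2f}:1")       # positive:negative = 1.03:1
```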

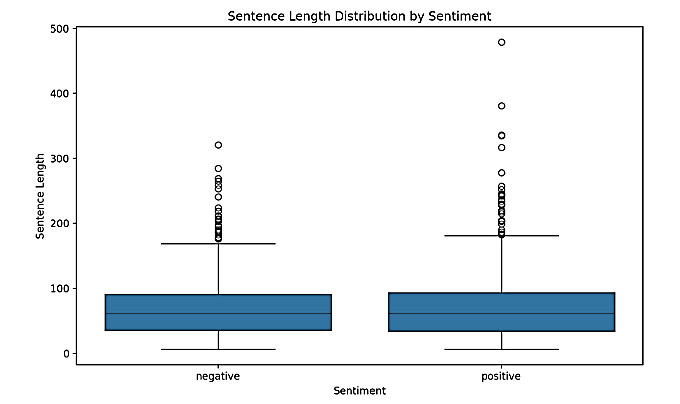

Sentence Length Analysis

This analysis examines the distribution of sentence lengths in the dataset, which can be visualized with a histogram or box plot, to show how long or short the entries are on average.

Figure 3. Sentence Length Distribution by Sentiment(17)
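A minimal sketch of the per-sentiment length statistics follows. It assumes that length is measured in characters (the Conclusions report an average of 67,73 characters per review) and that entries are `(text, label)` pairs; the sample records are hypothetical. The mean and median are the summary values a histogram or box plot would be read against.

```python
from collections import defaultdict
from statistics import mean, median
from typing import Dict, Iterable, List, Tuple


def length_stats_by_sentiment(records: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Mean and median character length of the entries in each sentiment class."""
    lengths: Dict[str, List[int]] = defaultdict(list)
    for text, label in records:
        lengths[label].append(len(text))  # length in characters, spaces included
    return {
        label: {"mean": mean(values), "median": median(values)}
        for label, values in lengths.items()
    }


# Hypothetical sample records, not drawn from the dataset.
sample = [
    ("Great value", "positive"),
    ("Love it", "positive"),
    ("Stopped working after two days", "negative"),
    ("Arrived broken and support never replied", "negative"),
]
print(length_stats_by_sentiment(sample))
```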

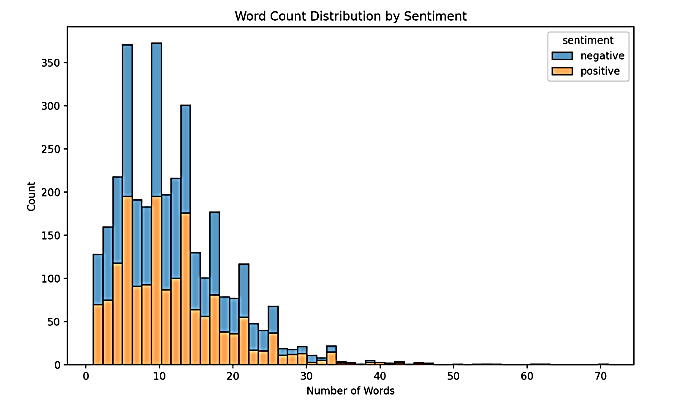

Word Count Analysis

Similar to sentence length, the distribution of word counts per entry can be shown with a histogram or box plot. This provides insight into the verbosity of the text data.

Figure 4. Word count distribution by sentiment(17)
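Word counts can be computed in the same spirit. This sketch splits text on whitespace (an assumption; a tokenizer would treat punctuation differently), buckets the counts into bins of width 5 as a histogram would, and measures the share of entries in the 5-20 word range discussed in the Result and Discussion section. The sample texts are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List


def word_count(text: str) -> int:
    """Number of whitespace-separated tokens in an entry."""
    return len(text.split())


def word_count_histogram(texts: Iterable[str], bin_width: int = 5) -> Dict[int, int]:
    """Map each bin's lower edge (0, 5, 10, ...) to the number of entries in that bin."""
    bins = Counter((word_count(t) // bin_width) * bin_width for t in texts)
    return dict(sorted(bins.items()))


def share_in_range(texts: List[str], low: int = 5, high: int = 20) -> float:
    """Fraction of entries whose word count lies in [low, high]."""
    counts = [word_count(t) for t in texts]
    return sum(low <= c <= high for c in counts) / len(counts)


# Hypothetical sample texts, not drawn from the dataset.
sample = [
    "Great value",
    "Stopped working after two days",
    "Arrived quickly and works exactly as described in the listing",
]
print(word_count_histogram(sample))
print(share_in_range(sample))
```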

RESULT AND DISCUSSION

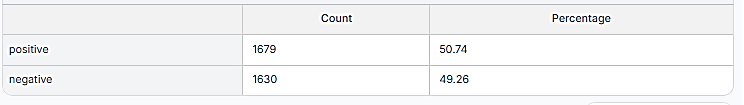

Figure 5. Composition of the sentiment dataset

The combined sentiment dataset comprises a total of 3 309 reviews, with a nearly equal distribution of positive (1 679 reviews, 50,74 %) and negative sentiments (1 630 reviews, 49,26 %). The positive-to-negative ratio stands at 1,03:1, reflecting a slight inclination toward positive sentiment. Analysis of the sentence length and word count distributions reveals differences in how users express positive versus negative feedback.

Sentiment Balance

The near-equal split between positive and negative sentiments (50,74 % vs. 49,26 %) shows that the dataset is balanced, which minimizes class-imbalance bias and makes it reliable for training machine learning models and conducting statistical analyses. This balance ensures that both positive and negative feedback are adequately represented, providing a well-rounded view of customer opinions.

Expression Patterns

The analysis of sentence length indicates that negative reviews are, on average, slightly longer than positive ones. This trend suggests that dissatisfied customers often provide more detailed explanations for their dissatisfaction. A word count distribution analysis further shows that most reviews, irrespective of sentiment, fall within a range of 5-20 words, with occasional outliers exceeding 30 words.

Implications

The slight positive skew in sentiment ratio (1,03:1) hints at a marginally favorable overall perception of the product or service. However, the detailed nature of negative reviews offers valuable insights into areas requiring improvement.

CONCLUSIONS

In conclusion, the analysis of the sentiment dataset containing 3 309 reviews reveals several insights into customer sentiment patterns and feedback characteristics. The dataset’s balanced sentiment distribution, with 50,74 % positive and 49,26 % negative sentiments, is consistent with an unbiased collection process and provides a well-rounded representation of customer opinions. The slight positive-to-negative ratio of 1,03:1 shows that the dataset captures feedback without significant skew. The concise nature of the reviews, which average 67,73 characters and 11,87 words, highlights customers’ tendency to give direct and focused feedback. Review lengths are broadly similar across sentiments, with negative reviews only slightly longer on average, which suggests a consistent response pattern and supports the reliability of the data. These attributes make the dataset valuable for training machine learning models, benchmarking customer satisfaction, and developing targeted customer engagement strategies. The near-equal sentiment distribution may point to a mature product or service with an engaged customer base, offering actionable insights both for reinforcing positive attributes and for addressing negative feedback. Additionally, the dataset’s size and balanced nature provide a sound foundation for statistically reliable, unbiased analysis, making it a useful resource for academic research and business applications.

BIBLIOGRAPHIC REFERENCES

1. Almrezeq N, Haque MA, Haque S, El-Aziz AAA. Device Access Control and Key Exchange (DACK) Protocol for Internet of Things. Int J Cloud Appl Comput [Internet]. 2022 Jan;12(1):1–14. Available from: https://services.igi-global.com/resolvedoi/resolve.aspx?doi=10.4018/IJCAC.297103

2. Zeba S, Haque MA, Alhazmi S, Haque S. Advanced Topics in Machine Learning. Mach Learn Methods Eng Appl Dev. 2022;197.

3. Whig V, Othman B, Gehlot A, Haque MA, Qamar S, Singh J. An Empirical Analysis of Artificial Intelligence (AI) as a Growth Engine for the Healthcare Sector. In: 2022 2nd International Conference on Advance Computing and Innovative Technologies in Engineering (ICACITE). IEEE; 2022. p. 2454–7.

4. Nandwani P, Verma R. A review on sentiment analysis and emotion detection from text. Soc Netw Anal Min. 2021;11(1):81.

5. Birjali M, Kasri M, Beni-Hssane A. A comprehensive survey on sentiment analysis: Approaches, challenges and trends. Knowledge-Based Syst. 2021;226:107134.

6. Olisah CC, Smith L. Understanding unconventional preprocessors in deep convolutional neural networks for face identification. SN Appl Sci. 2019;1(11):1511.

7. Zhang T, Tan Z. Survey of deep emotion recognition in dynamic data using facial, speech and textual cues. Multimed Tools Appl. 2024;1–40.

8. Yadav A, Gupta A. An emotion-driven, transformer-based network for multimodal fake news detection. Int J Multimed Inf Retr. 2024;13(1):7.

9. Ahmed T, Qassem M, Kyriacou PA. Physiological monitoring of stress and major depression: A review of the current monitoring techniques and considerations for the future. Biomed Signal Process Control. 2022;75:103591.

10. Wang X, Chen X, Cao C. Human emotion recognition by optimally fusing facial expression and speech feature. Signal Process Image Commun. 2020;84:115831.

11. Zhao J, Mao X, Chen L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed Signal Process Control. 2019;47:312–23.

12. Ghimire D, Jeong S, Lee J, Park SH. Facial expression recognition based on local region specific features and support vector machines. Multimed Tools Appl. 2017;76:7803–21.

13. Hamadani JD, Mehrin SF, Tofail F, Hasan MI, Huda SN, Baker-Henningham H, et al. Integrating an early childhood development programme into Bangladeshi primary health-care services: an open-label, cluster-randomised controlled trial. Lancet Glob Health. 2019;7(3):e366–75.

14. Kalusivalingam AK, Sharma A, Patel N, Singh V. Enhancing Process Automation Using Reinforcement Learning and Deep Neural Networks. Int J AI ML. 2020;1(3).

15. Zhao Z, Li Y, Yang J, Ma Y. A lightweight facial expression recognition model for automated engagement detection. Signal, Image Video Process. 2024;18(4):3553–63.

16. Alam MS, Rashid MM, Faizabadi AR, Mohd Zaki HF, Alam TE, Ali MS, et al. Efficient deep learning-based data-centric approach for autism spectrum disorder diagnosis from facial images using explainable AI. Technologies. 2023;11(5):115.

17. Sentiment and Emotion Analysis Dataset [Internet]. Kaggle. Available from: https://www.kaggle.com/datasets/kushagra3204/sentiment-and-emotion-analysis-dataset

FUNDING

None.

CONFLICT OF INTEREST

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

AUTHORSHIP CONTRIBUTION

Conceptualization: Anamika Kumari, Smita Pallavi and Subhash Chandra Pandey.

Investigation: Anamika Kumari, Smita Pallavi and Subhash Chandra Pandey.

Methodology: Anamika Kumari, Smita Pallavi and Subhash Chandra Pandey.

Writing - original draft: Anamika Kumari, Smita Pallavi and Subhash Chandra Pandey.

Writing - review and editing: Anamika Kumari, Smita Pallavi and Subhash Chandra Pandey.